While regulators and legal experts are getting into the bigger accountability questions around artificial intelligence, such as who is liable if an autonomous vehicle crashes, formal governance to guide decision-making is largely lacking for everyday business application. The urgency around this is increasing: AI is being widely used across functions ranging from marketing to customer service, and ambitions for it are soaring as organisations witness its potential.

Key takeaways

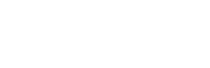

- As the use of AI increases in breadth and speed to market, businesses should prioritise its governance.

- Establishing a governing body, best practice playbooks and risk documentation will reduce risk and evaluation time.

- Good AI governance will allow companies to efficiently and safely use AI to realise ambitions.

With speed being a great motivator, it can be tempting to approach risk management in an ad hoc manner each time a new idea for AI takes shape. Ultimately, however, this leads to inefficiency, as teams must start from scratch each time they evaluate risk. The possibility of missing something, such as a system’s potential for bias, is high, and can cause costly problems in the future.

Establishing appropriate governance for AI — that is, policies, processes and standards — which the whole organisation can refer to, is a positive force in enabling bold creativity. By creating a robust and repeatable framework of governance, AI activities can be implemented consistently and safely, allowing companies to successfully deliver artificial intelligence systems responsibly, every time, and at scale. In the end, the right level of structure and control can lead to lower risk for the business.

Establish a multidisciplinary governing body

Firstly, companies should consider establishing an oversight body — with representatives from a range of different areas. These could include executive management, compliance, technology specialists and data scientists, and process owners from different functions within the business.

The organisation’s overarching strategy for AI — such as improved customer targeting, or a richer customer experience at a lower cost — should provide the context for the body’s governance. It will help determine the business’ appetite and tolerance for risk, how it may vary in different contexts, and enable the creation of an AI governance framework for the rest of the organisation. Additionally, it is important that the Board is kept informed of AI risks in order to make appropriate business strategy decisions.

The governing body for AI could be an extension of an existing governance team, or may need to be formed from scratch. One way an existing governance structure may be augmented or extended is by adopting a standard three lines of defense risk management model.

Create a common playbook for all circumstances

Each application of AI will have its own risks and sensitivities that require different levels of governance. For example, learned bias in a customer recommendation engine is less likely to result in significant consumer harm than an unstable medical diagnostic AI, or one controlling an autonomous vehicle.

Therefore, governance structures should cater to many possibilities, acting as a playbook — a ‘how to’ to approach new initiatives. This includes building in flexibility for future issues. It may be helpful to categorise levels of risks together — such as financial, reputational, safety — to determine the level of rigor required. The more scrutiny, negotiation and resolution that can be done up front, the less need there will be for damage control later.
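As an illustration of how categorised risks might drive the level of rigor, here is a minimal Python sketch. The `Severity` scale, the `RiskAssessment` record and the three review tiers are all hypothetical names chosen for this example; a real playbook would define its own categories and thresholds.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical three-point scale; a real playbook may use a finer one."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class RiskAssessment:
    """Scores for the example categories: financial, reputational, safety."""
    financial: Severity
    reputational: Severity
    safety: Severity


def required_rigor(assessment: RiskAssessment) -> str:
    """Return an illustrative review tier based on the worst-scoring category."""
    worst = max(assessment.financial, assessment.reputational, assessment.safety)
    if worst == Severity.HIGH:
        return "full governing-body review"
    if worst == Severity.MEDIUM:
        return "enhanced review"
    return "standard review"


# Example: a recommendation engine with moderate reputational exposure.
recommendation_engine = RiskAssessment(
    financial=Severity.LOW,
    reputational=Severity.MEDIUM,
    safety=Severity.LOW,
)
print(required_rigor(recommendation_engine))
```

Taking the worst category rather than an average is one possible design choice: it means a single high-severity risk, such as safety, cannot be diluted by low scores elsewhere.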

A strong central reference guide will help to frame collaboration and support negotiations between stakeholders on trade-offs between what they’d like to accomplish and tolerable levels of risk. If a highly elaborate AI application is too hard to explain to a regulator, for instance, a more straightforward capability, which still meets business requirements, might be preferable.

The playbook should include leading practice examples, which could be borrowed from lateral industries — such as finance — which are already using risk assessment in other areas of work. Complementing this information, data and model governance practices should be established, including the use of data and model inventories which will provide a reference point for those looking into the use of new AI systems.

Strong governance practices around AI will help to accelerate innovation across the business landscape while reducing the risk of a complex technology. Once companies can assess risk consistently, no matter the conditions, and determine what is needed to balance these against desired business outcomes, teams will be able to make risk-based decisions efficiently and take their AI plans to the next stage.

Develop appropriate data and model governance processes

Data governance

If different AI models are built from the same dataset, and that data has problems, such as an inherent bias, the risk will be magnified across every model built on it. AI plans should therefore start with a clear picture of where data has come from, how reliable it is, and any regulatory sensitivities that might apply to its use, before being approved. Data preparation and data ‘labelling’ processes should be traceable. That is, it should be possible to show an audit trail of everything that has happened to the data over time, in the event that there is a later audit or investigation.
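One simple way to picture such an audit trail is an append-only log of events attached to each dataset. The sketch below is an assumption-laden illustration, not a prescribed tool: `DatasetLineage`, `LineageEvent` and their fields are hypothetical names, and a production system would also need access controls and tamper-resistant storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class LineageEvent:
    """One immutable entry in a dataset's history (hypothetical structure)."""
    step: str          # e.g. "ingestion", "cleaning", "labelling"
    performed_by: str  # person or team responsible
    description: str   # what was done to the data
    timestamp: str     # UTC time in ISO 8601 format


@dataclass
class DatasetLineage:
    """Records where a dataset came from and everything done to it since."""
    dataset_name: str
    source: str
    events: List[LineageEvent] = field(default_factory=list)

    def record(self, step: str, performed_by: str, description: str) -> LineageEvent:
        """Append a new event; existing events are never edited or removed."""
        event = LineageEvent(
            step=step,
            performed_by=performed_by,
            description=description,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self.events.append(event)
        return event

    def audit_trail(self) -> List[str]:
        """Return the history in the order it happened, one line per event."""
        return [
            f"{e.timestamp} | {e.step} | {e.performed_by} | {e.description}"
            for e in self.events
        ]


lineage = DatasetLineage(dataset_name="customer_feedback", source="CRM export")
lineage.record("cleaning", "data engineering", "Removed duplicate records")
lineage.record("labelling", "analyst team", "Tagged each record with a sentiment label")
for line in lineage.audit_trail():
    print(line)
```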

Model governance

Another important governance consideration is model governance, or the way an organisation oversees an AI solution from inception through deployment. Model governance provides standards and guidelines to ensure AI applications are suitable and sustainable for their intended use, and don’t expose the business to undue risk. In an artificial intelligence context, this is about how the algorithms work and make decisions: for example, what assumptions were made in creating a model, or why a specific model was selected.

Financial services organisations will typically already have strong model governance measures in place, for example, around how lending decisions are reached, and some of these elements will be transferable to other AI applications — for instance, where intelligent automation tools do the calculations to approve customers for borrowing.

Mobilising data and model governance

Until now, where data and model governance have existed as controls, they have been managed in isolation. Yet, in an AI context, data and model governance should be considered in tandem. Datasheets and model sheets, tools that can be used to document provenance and purpose and to inform data and model inventories, can act as a helpful bridge as organisations get started.

Datasheets, which can be as simple as a shared word document, provide a standard way to record how a dataset was created, and document characteristics, motivations and potential skews it contains. They help enforce consistency across teams, and introduce a controlled business process for data curation. Having accessible central datasheets will mean new AI solutions can be reviewed quickly and efficiently for data-related risk factors.

Similarly, model sheets — be they word processing documents or more complex applications — can provide a useful first step towards model governance. They also act as a central repository for documentation, cataloguing information about the model such as validation testing results or related business requirements. These will help in reporting risk to business units and senior management.
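To show how datasheets and model sheets might work in tandem, the following Python sketch represents each as a small record and cross-references them. All class names, field names and the `models_exposed_to_skew` helper are hypothetical, chosen to mirror the characteristics described above; the same information could just as well live in shared documents.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Datasheet:
    """Records how a dataset was created and any known skews (illustrative)."""
    name: str
    motivation: str
    creation_method: str
    known_skews: List[str] = field(default_factory=list)


@dataclass
class ModelSheet:
    """Catalogues a model's requirements, inputs and validation results."""
    name: str
    business_requirement: str
    datasets: List[str]  # names of the datasheets this model relies on
    validation_results: Dict[str, float] = field(default_factory=dict)


def models_exposed_to_skew(
    datasheets: List[Datasheet], model_sheets: List[ModelSheet]
) -> Dict[str, List[str]]:
    """Map each model to the known skews in the datasets it was built from."""
    skews_by_dataset = {d.name: d.known_skews for d in datasheets if d.known_skews}
    exposed: Dict[str, List[str]] = {}
    for model in model_sheets:
        skews = [s for ds in model.datasets for s in skews_by_dataset.get(ds, [])]
        if skews:
            exposed[model.name] = skews
    return exposed


datasheets = [
    Datasheet("loan_history", "Credit decisions", "Core banking export",
              known_skews=["under-represents applicants under 25"]),
    Datasheet("web_clicks", "Marketing analytics", "Site tracking logs"),
]
model_sheets = [
    ModelSheet("lending_approval", "Approve customers for borrowing", ["loan_history"]),
    ModelSheet("offer_ranking", "Improve customer targeting", ["web_clicks"]),
]
print(models_exposed_to_skew(datasheets, model_sheets))
```

A lookup like this is one way a review team could check a proposed AI solution for data-related risk factors without re-examining each dataset from scratch.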

Managing AI governance

Getting AI governance right is not about impeding business agility. Establishing a multidisciplinary governing body for assessing AI systems is a necessary step in implementing risk controls across a business. If its approach is positive and geared towards enablement, rather than being a hurdle to jump, it will provide the business with a springboard for future AI progress in a safe environment.

Three governance considerations to unlock AI.

This article is part of PwC’s Responsible AI initiative. Visit the Responsible AI website for further articles and information on PwC’s comprehensive suite of frameworks and toolkits, and take PwC’s free Responsible AI Diagnostic Survey.

Join PwC and the entire AI community at this year's World Summit AI in Amsterdam. View the full programme and speaker line-up, and book tickets, by visiting worldsummit.ai

World Summit AI 2019

October 9th-10th

Amsterdam, Netherlands

worldsummit.ai
